TIME AND PLACE

Lectures: Mon/Wed 4:30-6:00pm (the schedule on Penn InTouch will be updated)

Location: TBA

UNITS: 1.0 CU

INSTRUCTOR

Jing (Jane) Li (janeli@seas.upenn.edu)

Office: Levine 274

Office Hours: Wednesdays, 2pm-3pm

TEACHING ASSISTANTS

- Jialiang Zhang (jlzhang@seas.upenn.edu)

- Nick Beckwith (nickbeck@seas.upenn.edu)

COURSE OVERVIEW

Machine learning (ML) techniques are seeing rapidly increasing adoption in our daily lives, driven by synergistic advances in data, algorithms, and hardware. However, designing and implementing systems that efficiently support ML models across deployment scenarios from edge to cloud remains a significant challenge, in large part because of the gap between machine learning's promise (core ML algorithms and methods) and its real-world utility (diverse and heterogeneous computing platforms).

The course introduces a new research area at the intersection of machine learning and hardware systems that aims to bridge this gap. Topics include the basics of deep learning, deep learning frameworks, deep learning on contemporary computing platforms (CPU, GPU, FPGA) and programmable accelerators (TPU), performance measures, numerical representations and customized data types for deep learning, co-optimization of deep learning algorithms and hardware, deep learning training, and support for complex deep learning models. The course combines lectures, labs, research paper reading with in-class discussion, a final project, and guest lectures on state-of-the-art industry practice from Amazon, Facebook, Google, Intel, Microsoft, and Xilinx. The goals are to help students 1) gain hands-on experience deploying deep learning models on CPU, GPU, and FPGA; 2) develop intuition for closed-loop co-design of algorithms and hardware, using engineering knobs such as algorithmic transformation, data layout, numerical precision, data reuse, and parallelism to optimize performance for a given target accuracy metric; 3) understand future trends and opportunities at the intersection of ML and computer systems; and 4) (for CIS or ML students) gain the computer hardware knowledge needed for algorithm-level optimizations.

PREREQUISITES

CIS 240, or equivalent

Proficiency in programming: ENGR105, CIS110, CIS120, or equivalent. Lab assignments in this course will be based on PyTorch (CPU, GPU) and OpenCL (FPGA).

(CIS 371 is not officially required but is helpful.)

Undergraduates: Permission of the instructor is required to enroll in this class. If you are unsure whether your background is sufficient, please talk to or email the instructor.

GRADING POLICY

Lab Assignments = 40%

Final Project = 50%

Reading = 10%

Late policy: Each student has 5 free "late days" to use during the semester. You can use these late days to submit a lab or project after the due date without any penalty. Once the quota of late days is exhausted, assignments submitted late lose 50% of their credit per day, i.e., they receive zero credit after 2 additional late days. Do not use up all your late days on the first lab.
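To make the late-day arithmetic concrete, here is a small sketch in Python (the language of the software labs). It assumes, hypothetically, that late days are counted in whole days and are drawn from the shared 5-day quota automatically in submission order; the function name `late_credit` is illustrative only and is not part of any course tool.

```python
def late_credit(days_late_per_assignment, free_days=5, penalty_per_day=0.5):
    """Return the fraction of credit kept for each assignment, in submission order.

    Hypothetical model: free late days are applied automatically in submission
    order, lateness is counted in whole days, and each day beyond the quota
    deducts 50% of the assignment's credit (floored at zero).
    """
    remaining = free_days
    credits = []
    for days_late in days_late_per_assignment:
        covered = min(days_late, remaining)   # days absorbed by the free quota
        remaining -= covered
        penalized = days_late - covered       # days beyond the quota
        credits.append(max(0.0, 1.0 - penalty_per_day * penalized))
    return credits


# 3 days late on Lab 1, 3 days on Lab 2, 1 day on Lab 3.
print(late_credit([3, 3, 1]))  # [1.0, 0.5, 0.5]
```

In this example the first lab consumes 3 of the 5 free days, so Lab 2 has only 2 free days left and loses half its credit for the third day, and Lab 3 loses half its credit for its single late day.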

Collaboration policy: Study groups are allowed, and students may discuss the material in groups. However, each student must understand and complete the lab assignments independently and hand in their own lab assignment. For the final project, students are expected to work in groups of two. Each team should turn in one final project report, which must describe each team member's specific contribution.

Reading assignment turn-in: Paper reviews are submitted via Google Form by 3:00pm before each lecture (the link will be posted on the Canvas website).

Lab/project assignment turn-in: Lab and project reports are submitted electronically through the Penn Canvas website. Log in to Canvas with your PennKey and password, then select ESE 680 from the Courses and Groups dropdown menu. Each submission should be a single file (preferably .pdf).

CLASS HOMEPAGE:

TBA (a Canvas website will be provided)

Piazza will be used for discussions and clarifications.

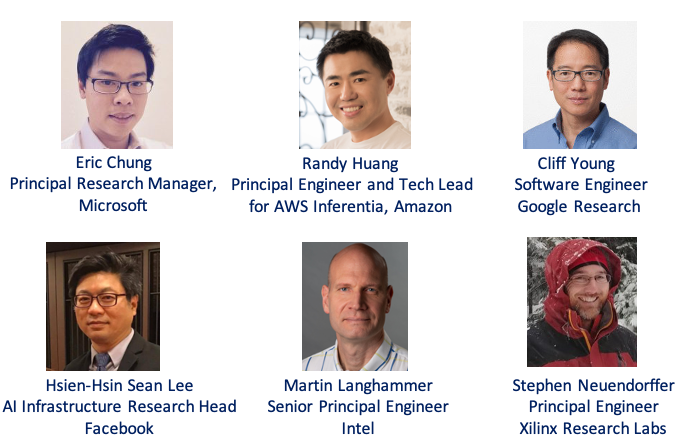

INVITED SPEAKERS

CLASS SCHEDULE (TENTATIVE)

| Date | Topic | Course Content | Notes/Assignment Due |
|---|---|---|---|
| 09/02 | Class Introduction | | 09/07 no class |
| 09/09 | Introduction to Deep Learning | Model, Dataset, Cost (loss) function, Optimization | |
| 09/14 | Deep Neural Network Architecture | Kernel Computation (Inference), AlexNet, VGG, GoogLeNet, ResNet | |
| 09/16 | Deep Learning System: Hardware and Software | CPU, GPU, FPGA, TPU, PyTorch, ONNX, MLPerf | |
| 09/21 | PyTorch Tutorial | | Lab 1 (due 09/28) |
| 09/23 | FPGA Fundamentals | | |
| 09/28 | Guest Lecture (Cliff Young, Google) | Neural Networks Have Rebooted Computer Architecture: What Should We Reboot Next? | Lab 2 (due 10/07) |
| 09/30 | Parallelism | Data/Model/Pipeline Parallelism, ILP, DLP, TLP | |
| 10/05 | Mapping and Scheduling I | Roofline, Parallelism/Data Reuse, Loop Unrolling/Order/Bound, Spatial/Temporal Choice | |
| 10/07 | OpenCL Tutorial | | Lab 3 (due 10/19) |
| 10/12 | Mapping and Scheduling II | Auto-Tuning, Optimization for Specialized HW, Case Studies | |
| 10/14 | Guest Lecture (Stephen Neuendorffer, Xilinx) | Optimizing data movement for Versal AI Engine | |
| 10/19 | Numerical Precision and Custom Data Types | INT, FP, Bfloat16, MS-FP, TF32, DLFloat16, Quantization Process (Mapping/Scaling/Range Calibration) | Lab 4 (due 11/02) |
| 10/21 | Guest Lecture (Randy Huang, Amazon) | Accelerating the Pace of AWS Inferentia Chip Development: From Concept to End Customers Use | |
| 10/26 | Project Overview | | Project release |
| 10/28 | Guest Lecture (Eric Chung, Microsoft) | TBD | |
| 11/02 | Arithmetic Hardware | Complexity, Cost | |
| 11/04 | Guest Lecture (Martin Langhammer, Intel) | Low Precision Arithmetic in FPGAs | |
| 11/09 | Co-Design I | Dense transformation (Direct Conv, GEMM, FFT, Winograd) | |
| 11/11 | Co-Design II | Sparse transformation | |
| 11/16 | Co-Design III | Compact Models and NAS | |
| 11/18 | Guest Lecture (Hsien-Hsin Sean Lee, Facebook) | TBD | |
| 11/23 | Natural Language Processing | RNN, LSTM, Attention, Transformer | |
| 11/25 | Training Neural Networks I | Backprop, Hyperparameters | |
| 11/30 | Training Neural Networks II | SGD Variants, Kernel Computation (Training), Cost Analysis | |
| 12/02 | Training Neural Networks III | Distributed Training | |
| 12/07 | Wrap-Up | | |
| 12/09 | Project Presentation | | Project final report (due 12/15) |

READING:

We will assign one paper to read before each lecture (from 9/9 to 11/18). Several review questions will guide you through the reading. In addition to the paper reading questions, we will ask you to provide brief course feedback after each lecture to help us make fine-grained adjustments throughout the semester.

LAB AND PROJECT:

We have four labs (two software labs and two hardware labs) and one final project (software/hardware co-design). Lab 1 is a one-week assignment; Labs 2-4 are two-week assignments. Labs 1 and 2 teach students how to build deep neural network (DNN) models in PyTorch and perform workload analysis on CPU and GPU. These two labs also help students get familiar with the AWS computing environment and learn to use tools that locate performance bottlenecks when running DNNs on different computing platforms. Lab 3 teaches students to implement a core architectural component on an FPGA in OpenCL and to get familiar with the Xilinx Vitis unified software platform. Lab 4 consists of two parts: 1) software experiments in PyTorch to study the impact of low-precision arithmetic on inference accuracy, and 2) hardware experiments in OpenCL to study the latency and resource utilization of low-precision arithmetic hardware.

The final project lasts 1.5 months (6 weeks). It requires students to leverage the key lessons from the four labs and co-design hardware and software, using the techniques and design options introduced in the course, to achieve an end-to-end implementation optimized for single-batch inference latency given an accuracy target.

COMPARISON TO ESE 532:

This course targets a broader audience (e.g., CIS or ML students), including but not limited to computer engineering students. It focuses on a specific application domain, deep learning, and provides in-depth coverage of deep learning-specific topics such as numerical precision and customized data types, and the resulting optimization opportunities in both hardware and algorithms. For non-computer engineering students, it provides the computer-related knowledge needed to develop intuition for designing hardware-friendly algorithms. For computer engineering students, it provides in-depth coverage of state-of-the-art deep learning techniques (software and hardware). This course is not a prerequisite for ESE 532, but it motivates and prepares computer engineering students before they dive into the advanced topics covered in ESE 532.

COMPARISON TO ESE 546:

This course focuses more on the practical deployment of deep learning in various computing environments (phone, wearable, cloud, and supercomputer) through the co-design of hardware and algorithms: 1) designing hardware that better supports current and next-generation deep learning models, and 2) designing hardware-friendly algorithms that run efficiently on current and future systems. ESE 546 focuses more on the fundamental principles of deep learning and how to build and train deep neural networks. The two courses are complementary.

ACADEMIC MISCONDUCT:

Please refer to Penn’s Code of Academic Integrity for more information.